When it comes to small, complex datasets—think rare diseases, early-stage clinical trials, or finely tuned molecular design—classical machine learning methods often struggle. Overfitting, limited accuracy, and high variability make extracting valuable insights a challenge. A recent study presented by a collaborative team from Merck, Amgen, Deloitte, and QuEra explores a promising alternative: quantum reservoir computing (QRC). Below is a summary of why they chose this approach, how they applied it, and the results they observed. See the arXiv paper detailing the study and the recording of our webinar covering it.

1. The Motivation

Small-Data Problem

In industries like biopharma, oncology, and personalized medicine, data is often scarce. Traditional machine learning models can overfit quickly on small datasets (e.g., 100–300 samples), and their predictive performance deteriorates on new, unseen data. Moreover, the variability of their performance across multiple data splits can be prohibitively high.

Why Quantum?

The team sought a quantum-based method to address these challenges—particularly with data riddled with complex correlations and nonlinearity. Quantum reservoir computing offered a compelling solution because:

- It doesn’t rely on large-scale training (in contrast to many variational quantum algorithms).

- It can handle nonlinear dynamics well, drawing on the rich interactions within the quantum hardware.

- It holds the promise of scalability, especially important as quantum devices grow in qubit count.

Focusing on Molecular Properties

Molecular property prediction is a natural test case for small-sample research: drug discovery pipelines, for example, often produce datasets that are too limited for standard machine learning techniques. By showcasing results on a molecular dataset, the group aimed to illustrate how QRC could generalize to other small-data challenges in pharmaceuticals, healthcare, and beyond.

2. The Process

Neutral-Atom Hardware and Reservoir Computing

QuEra’s neutral-atom quantum hardware provides a highly scalable platform where individual atoms act as qubits. Unlike some quantum technologies, neutral-atom systems can potentially reach tens or even hundreds of thousands of qubits without the complexity of massive wiring or cryogenic refrigeration. This is key for reservoir computing, where the “reservoir” is a physical system through which data is passed to generate richer feature representations (called embeddings).

Embedding Workflow

1. Data Preprocessing and Encoding

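Small, high-value datasets (e.g., molecular property data) are cleaned and, where needed, clustered or reduced to a compact set of features that still captures the essential information, so that the inputs fit the number of parameters the hardware can encode.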

The numerical values are then encoded into the quantum computer. For neutral-atom hardware, data can be embedded by adjusting local parameters (e.g., atom positions, pulse strengths).
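The following is a minimal Python sketch of one possible pipeline for this first step. It makes several assumptions:

- NumPy is available.
- Principal component analysis (computed via SVD) stands in for the "reduced" step.
- Each retained feature is min-max scaled into a range that would set one atom's local detuning.

The function names `reduce_features` and `encode_as_detunings` and the [-1, 1] range are hypothetical choices for illustration. They are not the study's actual preprocessing or any QuEra API.

```python
import numpy as np

def reduce_features(X, n_components):
    """Project centered data onto its top principal components (PCA via SVD).

    Illustrative assumption: one retained component per available atom.
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)
    # Rows of Vt are principal directions, sorted by decreasing singular value.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:n_components].T

def encode_as_detunings(Z, delta_min=-1.0, delta_max=1.0):
    """Min-max scale each column into [delta_min, delta_max].

    Hypothetical encoding: feature j of a sample sets the local detuning of
    atom j. The range is a placeholder in arbitrary units, not a hardware spec.
    """
    Z = np.asarray(Z, dtype=float)
    lo = Z.min(axis=0)
    span = Z.max(axis=0) - lo
    span[span == 0] = 1.0  # constant columns map to delta_min instead of NaN
    return delta_min + (Z - lo) / span * (delta_max - delta_min)

# Usage: 120 samples with 30 descriptors, reduced to 8 "atoms".
rng = np.random.default_rng(0)
X = rng.normal(size=(120, 30))
detunings = encode_as_detunings(reduce_features(X, n_components=8))
print(detunings.shape)  # (120, 8)
```

In a real evaluation, fit the PCA directions and the min/max values on the training split only, then reuse them for test samples. This avoids leaking information across the split.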

2. Quantum Evolution

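Once the data is encoded, the atoms evolve under the system's native quantum dynamics, including strong interactions between neighboring atoms. Because the hardware operates in analog mode, these interactions arise directly from the physics. They generate complex, nonlinear transformations of the input without requiring heavy parameter optimization.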
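The sketch below gives some intuition for the evolution step by simulating a tiny reservoir exactly with NumPy. Its assumptions:

- The dynamics follow a textbook Rydberg-type Hamiltonian, with a global drive `omega`, per-atom detunings, and van der Waals interactions `c6 / r**6`, in arbitrary units.
- All atoms start in the ground state.
- The features are each atom's excitation probability at a few evolution times.

Parameter values, readout times, and helper names are illustrative assumptions. Exact simulation like this is only feasible for a handful of atoms.

```python
import itertools
import numpy as np

def rydberg_hamiltonian(detunings, positions, omega=1.0, c6=1.0):
    """Toy Hamiltonian on N two-level atoms (dimension 2**N):
        H = (omega/2) sum_i X_i - sum_i delta_i n_i + sum_{i<j} c6/r_ij**6 n_i n_j
    Units and default values are illustrative, not hardware parameters.
    """
    n = len(detunings)
    dim = 2 ** n
    # occ[b, i] = 1 when atom i is excited in basis state b (bit i of b).
    occ = np.array([[(b >> i) & 1 for i in range(n)] for b in range(dim)], dtype=float)
    diag = -occ @ np.asarray(detunings, dtype=float)
    for i, j in itertools.combinations(range(n), 2):
        r = np.linalg.norm(np.asarray(positions[i]) - np.asarray(positions[j]))
        diag += c6 / r**6 * occ[:, i] * occ[:, j]
    H = np.diag(diag).astype(complex)
    for i in range(n):
        for b in range(dim):
            H[b ^ (1 << i), b] += omega / 2  # X_i flips bit i
    return H, occ

def reservoir_features(detunings, positions, times):
    """Evolve |00...0> under H; return each atom's excitation probability at each time."""
    H, occ = rydberg_hamiltonian(detunings, positions)
    # H is Hermitian, so exp(-iHt) = V diag(exp(-i E t)) V^dagger.
    evals, evecs = np.linalg.eigh(H)
    psi0 = np.zeros(H.shape[0], dtype=complex)
    psi0[0] = 1.0
    coeffs = evecs.conj().T @ psi0
    feats = []
    for t in times:
        psi_t = evecs @ (np.exp(-1j * evals * t) * coeffs)
        probs = np.abs(psi_t) ** 2
        feats.extend(probs @ occ)  # expected excitation of each atom
    return np.array(feats)

# Usage: 4 atoms on a line with unit spacing, one sample's encoded detunings.
positions = [(float(k), 0.0) for k in range(4)]
x = reservoir_features([0.5, -0.2, 0.8, 0.1], positions, times=[0.5, 1.0, 2.0])
print(x.shape)  # (12,)
```

The detunings enter the Hamiltonian linearly, yet the resulting features are nonlinear functions of the inputs. No parameter in this step is trained.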

3. Measurement and Embedding Extraction

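A single measurement yields only one random outcome, so the system is prepared and measured many times (repeated "shots"). Statistics computed from these outcomes form a new set of "quantum-processed" features, which often show patterns that are difficult to replicate with purely classical methods.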
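On hardware, exact probabilities like those in a simulation are not available; only sampled bitstrings are. The following sketch, again assuming NumPy, draws a finite number of shots from a distribution over basis states. It turns them into features:

- each atom's excitation frequency;
- for every pair of atoms, the fraction of shots in which both are excited.

Using single-atom and pairwise statistics is a common choice in reservoir computing and an assumption here. The helper names are hypothetical, and the toy distribution is random rather than hardware data.

```python
import numpy as np

def sample_shots(probs, n_atoms, shots, rng):
    """Draw `shots` basis states from `probs` (length 2**n_atoms) and return a
    (shots, n_atoms) array of 0/1 outcomes, where column i is atom i."""
    idx = rng.choice(len(probs), size=shots, p=probs)
    return (idx[:, None] >> np.arange(n_atoms)) & 1

def shot_features(bits):
    """Estimate each atom's excitation probability and, for each pair i < j,
    the probability that both atoms are excited."""
    bits = bits.astype(float)
    singles = bits.mean(axis=0)
    pairs = (bits.T @ bits) / len(bits)  # entry (i, j): fraction of shots with both excited
    iu = np.triu_indices(bits.shape[1], k=1)
    return np.concatenate([singles, pairs[iu]])

# Usage: a random toy distribution over the 16 basis states of 4 atoms.
rng = np.random.default_rng(0)
probs = rng.dirichlet(np.ones(16))
features = shot_features(sample_shots(probs, n_atoms=4, shots=1000, rng=rng))
print(features.shape)  # (10,)
```

The statistical error of each estimate shrinks roughly as 1/sqrt(shots), which is one reason the number of repetitions matters.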

4. Classical Post-Processing

Rather than training the quantum system itself, the team trains a classical model (such as a random forest) on these quantum-derived embeddings. Limiting training to the classical side sidesteps known challenges of hybrid quantum-classical training, such as vanishing gradients (often called barren plateaus).
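The key design point of this step is that only a lightweight readout is trained while the reservoir stays fixed. The study mentions models such as a random forest. To keep this sketch dependency-free, it substitutes closed-form ridge regression written in NumPy. `fit_ridge` and `predict` are hypothetical helpers, and the embeddings in the usage lines are synthetic.

```python
import numpy as np

def fit_ridge(E, y, alpha=1.0):
    """Closed-form ridge regression on embeddings E (n_samples x n_features).
    The intercept is handled by centering and is not penalized."""
    mu_E, mu_y = E.mean(axis=0), y.mean()
    Ec = E - mu_E
    w = np.linalg.solve(Ec.T @ Ec + alpha * np.eye(E.shape[1]), Ec.T @ (y - mu_y))
    return w, mu_y - mu_E @ w

def predict(model, E):
    """Apply the fitted linear readout."""
    w, b = model
    return E @ w + b

# Usage: synthetic "embeddings" standing in for reservoir outputs.
rng = np.random.default_rng(0)
E = rng.uniform(size=(150, 12))
y = E @ rng.normal(size=12) + 0.01 * rng.normal(size=150)
model = fit_ridge(E, y, alpha=1e-3)
rmse = np.sqrt(np.mean((predict(model, E) - y) ** 2))
print(f"training RMSE: {rmse:.3f}")
```

Because the readout is a convex problem with a closed-form solution, there is no gradient-based training loop to stall. Any classical model, including a random forest, could take its place.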

Comparisons to Classical Methods

To ensure robustness, the study compared:

- Raw Data fed into a variety of classical machine learning models.

- Classical Embeddings (e.g., standard kernel methods like Gaussian processes).

- Quantum Reservoir Embeddings from the neutral-atom system.

The team also ran experiments for multiple dataset sizes—ranging from about 100 records to several hundred—to simulate the real-world progression from “small data” to more mature data samples.
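A comparison of this kind can be organized as a loop over dataset sizes and repeated random splits. The loop records both the mean and the spread of the test error, since variability across splits is one of the quantities of interest.

The sketch below makes these assumptions:

- NumPy is available.
- Ridge regression is the model.
- A random Fourier feature map serves as a placeholder classical embedding; quantum reservoir features would be passed in the same way.

The data, sizes, and helper names are illustrative, and the printed numbers say nothing about the study's results.

```python
import numpy as np

def ridge_rmse(E_tr, y_tr, E_te, y_te, alpha=1.0):
    """Fit closed-form ridge regression on the training split; return test RMSE."""
    mu_E, mu_y = E_tr.mean(axis=0), y_tr.mean()
    Ec = E_tr - mu_E
    w = np.linalg.solve(Ec.T @ Ec + alpha * np.eye(E_tr.shape[1]), Ec.T @ (y_tr - mu_y))
    pred = (E_te - mu_E) @ w + mu_y
    return float(np.sqrt(np.mean((pred - y_te) ** 2)))

def evaluate(features, y, sizes, n_splits=10, test_frac=0.2, seed=0):
    """For each dataset size, draw n_splits random subsets, split each into
    train/test, and return {size: (mean_rmse, std_rmse)}."""
    rng = np.random.default_rng(seed)
    results = {}
    for n in sizes:
        scores = []
        for _ in range(n_splits):
            subset = rng.choice(len(y), size=n, replace=False)  # random order, so slicing splits randomly
            n_test = max(1, int(round(test_frac * n)))
            te, tr = subset[:n_test], subset[n_test:]
            scores.append(ridge_rmse(features[tr], y[tr], features[te], y[te]))
        results[n] = (float(np.mean(scores)), float(np.std(scores)))
    return results

# Usage: synthetic nonlinear target; compare raw features with a placeholder embedding.
rng = np.random.default_rng(1)
X = rng.normal(size=(900, 8))
y = np.sin(X[:, 0]) + X[:, 1] * X[:, 2] + 0.1 * rng.normal(size=900)
W = rng.normal(size=(8, 64))
b = rng.uniform(0, 2 * np.pi, size=64)
E = np.cos(X @ W + b)  # random Fourier features; swap in reservoir features here
for name, feats in [("raw", X), ("embedded", E)]:
    print(name, evaluate(feats, y, sizes=[100, 200, 800]))
```

Reporting the standard deviation alongside the mean makes the "lower variability" comparison explicit rather than anecdotal.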

3. The Results

Strong Performance on Small Data

For datasets containing 100–200 samples, the QRC-based approach consistently outperformed purely classical methods. This mattered in two critical ways:

- Higher Accuracy: Predictions of molecular properties were notably more accurate with quantum-derived embeddings.

- Lower Variability: Performance across different train-test splits was more stable, a major concern when data volume is limited.

Convergence with Larger Datasets

As the number of samples rose (e.g., 800+), the gap between quantum and classical methods narrowed. In other words, with more data, conventional machine learning caught up. However, the quantum approach demonstrated an edge in “learning more” from fewer data points—an advantage that could be transformational in early-stage or niche applications where data is, by nature, limited.

Interpretable Embeddings

Visualizations using techniques like UMAP underscored why the QRC approach can excel. The quantum embeddings often formed more distinct clusters, revealing clearer, more separated patterns in the data—essential for both classification and regression tasks.
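The study relied on UMAP visualizations. One way to complement such plots with a number is the silhouette coefficient. It compares each point's mean distance to its own cluster with its mean distance to the nearest other cluster.

The NumPy implementation below is a swapped-in illustration, not a metric reported in the study. The cluster labels could be, for example, binned property values, which is an assumption.

```python
import numpy as np

def silhouette(points, labels):
    """Mean silhouette coefficient over all points.

    For point i: a = mean distance to the other members of its cluster,
    b = smallest mean distance to the members of any other cluster,
    s = (b - a) / max(a, b). Values near 1 mean compact, well-separated clusters.
    """
    P = np.asarray(points, dtype=float)
    labels = np.asarray(labels)
    D = np.linalg.norm(P[:, None, :] - P[None, :, :], axis=-1)  # pairwise distances
    clusters = np.unique(labels)
    scores = []
    for i in range(len(P)):
        same = labels == labels[i]
        if same.sum() < 2:
            scores.append(0.0)  # common convention for singleton clusters
            continue
        a = D[i, same].sum() / (same.sum() - 1)  # self-distance is zero; exclude it from the count
        b = min(D[i, labels == k].mean() for k in clusters if k != labels[i])
        scores.append((b - a) / max(a, b))
    return float(np.mean(scores))

# Usage: two synthetic groups, once tightly clustered and once heavily overlapping.
rng = np.random.default_rng(0)
labels = np.repeat([0, 1], 50)
centers = np.array([[0.0, 0.0], [5.0, 5.0]])[labels]
tight = centers + 0.3 * rng.normal(size=(100, 2))
loose = centers + 3.0 * rng.normal(size=(100, 2))
print(silhouette(tight, labels), silhouette(loose, labels))
```

The tight configuration scores much higher, matching the visual impression of "more distinct clusters."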

Hardware Scalability

In test experiments, the QRC method scaled up to over 100 qubits on QuEra’s hardware—among the largest quantum machine learning demonstrations published so far. As hardware capacity grows, the team expects further gains in addressing complex, high-dimensional problems that classical simulators cannot easily replicate.
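A quick back-of-the-envelope calculation shows why 100-qubit dynamics are hard to simulate classically. Storing a dense state vector for n qubits requires 2^n complex amplitudes, at 16 bytes each in double-precision complex format. This counts only brute-force state-vector simulation. Approximate methods such as tensor networks can do much better for some dynamics, so treat the result as an illustration of scaling rather than a hard limit.

```python
def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory for a dense state vector of 2**n_qubits complex amplitudes
    (16 bytes per amplitude for complex128)."""
    return (2 ** n_qubits) * bytes_per_amplitude

for n in (30, 50, 100):
    print(n, f"{statevector_bytes(n):.3e} bytes")
# 30 1.718e+10 bytes
# 50 1.801e+16 bytes
# 100 2.028e+31 bytes
```

At 30 qubits this is already about 16 GiB. At 100 qubits it is roughly 2 × 10^31 bytes, far beyond any existing computer.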

Future Outlook

Beyond Molecular Properties

The team believes QRC can be extended to several other arenas:

- Clinical Trials: Particularly in rare diseases or early-phase trials with very few patient samples.

- Anomaly Detection: Strong nonlinearities and small data volumes make quantum approaches appealing for healthcare data, such as ECG or voice recordings.

- Large Language Models (LLMs): Some early research indicates that quantum methods could enhance attention mechanisms or guide prompt engineering in AI.

- Time-Series Prediction: Audio signals, ECG readings, and real-world sensor data often have hidden correlations that QRC might capture more effectively than classical techniques.

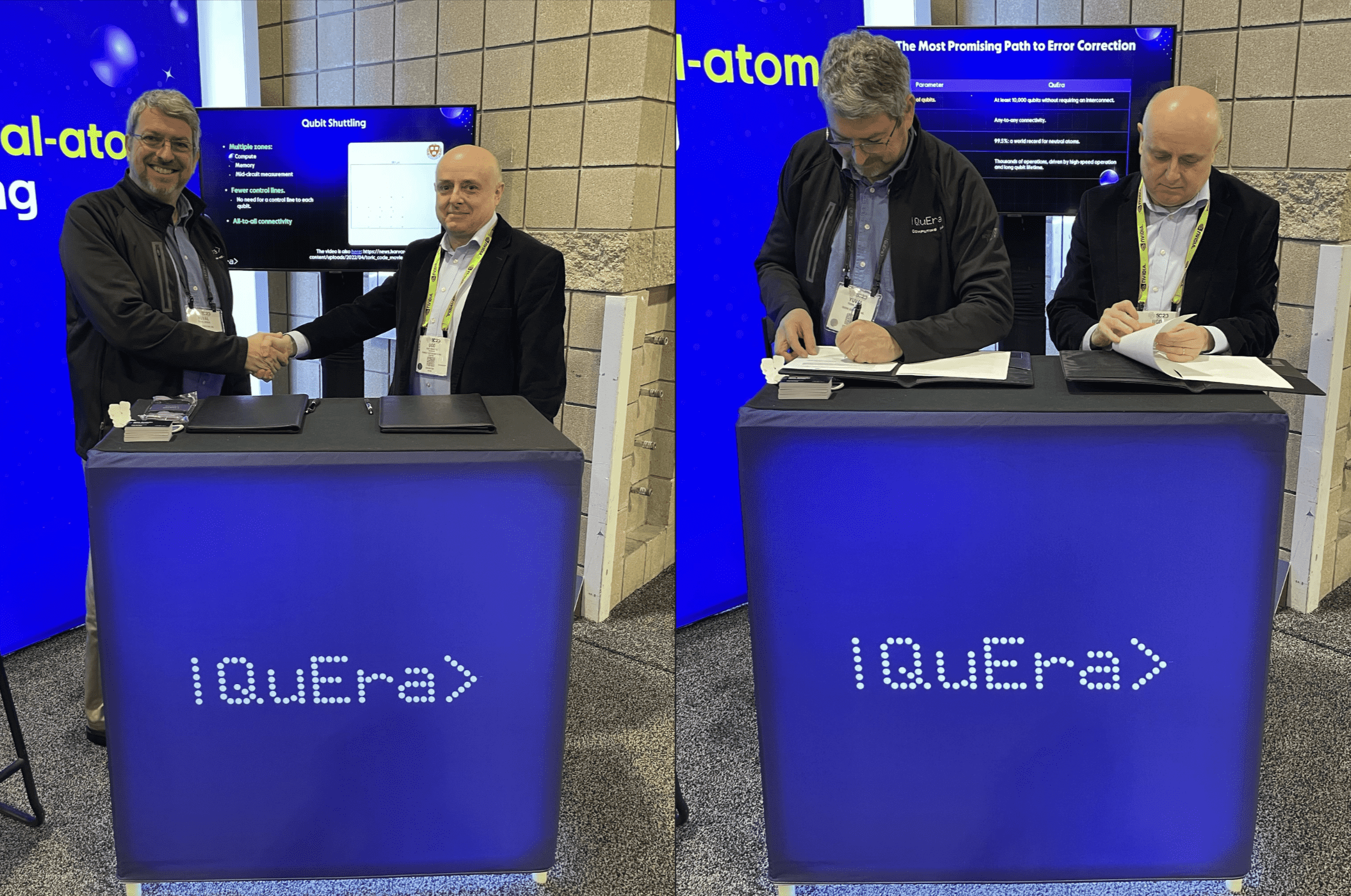

Collaboration Is Key

This project exemplifies how deep partnerships among quantum hardware developers, pharmaceutical experts, and data science teams can break new ground. Real-world data, specialized domain knowledge, and quantum engineering expertise come together to tackle problems that simply weren’t tractable a few years ago.

Conclusion

This collaborative study underscores the emerging role of quantum reservoir computing in unlocking value from small but critical datasets. By harnessing the inherent dynamics of neutral-atom quantum systems, the team showed improved predictive performance, especially where classical machine learning tends to falter—namely, in low-sample, high-complexity scenarios.

While classical methods remain competitive for large datasets, quantum reservoir computing demonstrates a powerful new capability to “do more with less.” As the technology scales, the research community will likely uncover even more applications where QRC can deliver unique advantages—accelerating discoveries and innovations in pharmaceuticals, healthcare, and beyond.
